How 2026 Will Redefine Data and AI Observability: Building Transparent, Reliable, and Trustworthy Systems for the Next Generation of Intelligent Enterprises

The adoption of AI and data science among organizations across industries is skyrocketing. Enterprises are increasingly pushing for digital and AI transformation, as well as the integration of advanced machine learning models into their business processes. As this push accelerates, so does the need for better visibility into how these data and AI systems actually behave.

As we approach 2026, data and AI observability will move from a luxury to the foundation of trustworthy digital ecosystems. The question for organizations is no longer whether they need observability, but how deeply they can embed it into every layer of their AI development and deployment processes.

Changing Focus from Building AI to Observing AI

For the last few years, the major focus has been on designing and integrating machine learning models and AI systems to automate decision pipelines. Whether it is predictive maintenance in the manufacturing industry or generative AI for marketing, the role and use of AI have grown exponentially. However, these systems are still treated as ‘black boxes’: data goes in, predictions come out, and teams are often unclear about why a model behaves the way it does.

This lack of transparency is a serious risk. For example, if a data pipeline silently breaks or a model starts hallucinating, the consequences can be severe: biased outputs, regulatory compliance breaches, and costly business decisions.

Therefore, the next stage of AI maturity isn’t about building smarter systems but about observing them with the same priority given to uptime and performance.

This is why data and AI observability become important.

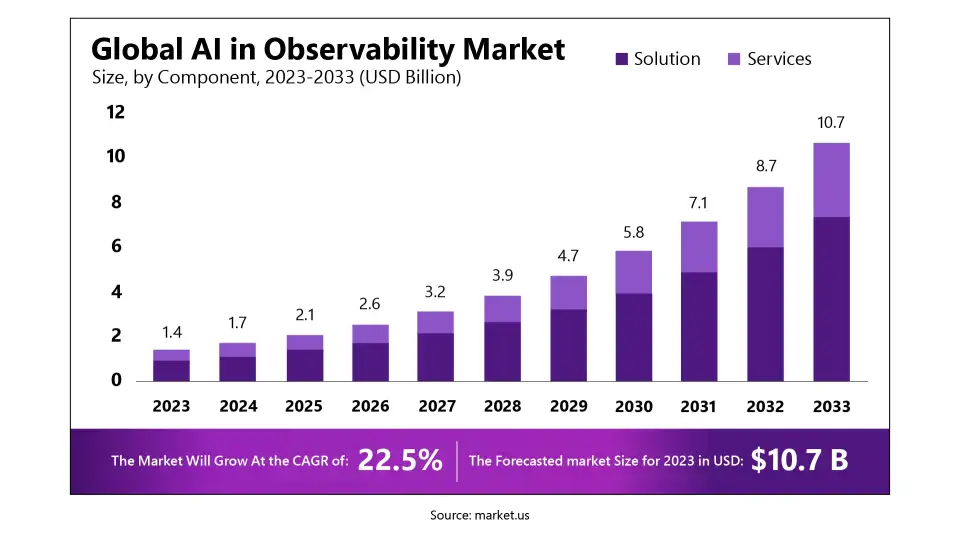

The global AI in Observability market is projected to surge from USD 2.1 billion in 2023 to approximately USD 10.7 billion by 2033, expanding at a robust CAGR of 22.5% between 2025 and 2033. (Source: Market.us)

What is Data and AI Observability?

Recent studies indicate a rapid surge in the adoption of AI agents across industries. According to a KPMG survey, 88% of organizations are either exploring or actively piloting AI agent initiatives. Meanwhile, Gartner projects that by 2028, over one-third of enterprise software applications will feature agentic AI — the core technology that powers intelligent, autonomous agents.

Data and AI observability, in simple terms, refers to the continuous practice of monitoring the quality, reliability, and performance of data pipelines, data warehouses, AI models, and the surrounding infrastructure supporting them.

It goes beyond traditional system monitoring, which tracks metrics such as latency or error rates, and covers:

Monitoring these capabilities helps professionals understand how data and AI systems behave in real time, and why.

Why is 2026 a Turning Point?

The timing of this shift from building to observing is not coincidental. Several industry and technological trends are converging to make 2026 a pivotal year for observability. Here’s why:

The growth of generative AI has also led to an explosion in the number of machine learning models deployed. Organizations now manage thousands of data flows and models across hybrid and multi-cloud environments, far too many to track manually. Automated, machine-learning-powered observability platforms will therefore become the practical solution.

Consumers and users are becoming increasingly aware of data privacy and security. In high-stakes industries such as finance, healthcare, and public policy, where AI plays a major role, trust in AI is essential. Stakeholders want explanations along with outputs, and observability supplies the visibility needed for explainability and greater user trust.

Monitoring tools, from data quality platforms (Monte Carlo, Bigeye) to AI observability solutions (Arize, Fiddler, WhyLabs), are maturing fast. Going forward, integrated platforms that provide unified monitoring for data and AI will become mainstream.

Organizations report up to 80% less data downtime, 30% faster monitoring coverage, 70% broader data quality checks, and up to 50% savings in data engineering costs with observability tools like Monte Carlo (Source: Monte Carlo).

Apart from these, regulations around AI governance and transparency are becoming stricter, requiring machine learning models to be transparent and explainable. Meanwhile, operational downtime, data corruption, and inaccurate model responses can cost organizations millions of dollars, losses that effective observability can help minimize.

Pillars of Data and AI Observability

Organizations that want to adopt effective data and AI observability must focus on these five essential pillars:

Insights and predictions are only as good as the data behind them. When observability systems continuously check data for anomalies in accuracy and quality, teams can detect issues before they escalate.
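A minimal sketch of such continuous checks, assuming records arrive as a list of Python dicts and that the last load time and recent daily row counts are available from pipeline metadata. The three checks (null rate, freshness, and a z-score on row volume), the function names, and the thresholds are illustrative choices, not the API of any particular observability tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def check_null_rate(rows, column, max_null_rate=0.05):
    """Fail if the share of missing values in `column` exceeds the threshold."""
    if not rows:
        return CheckResult(f"null_rate:{column}", False, "no rows")
    rate = sum(1 for row in rows if row.get(column) is None) / len(rows)
    return CheckResult(f"null_rate:{column}", rate <= max_null_rate, f"{rate:.1%} null")


def check_freshness(last_loaded_at, now, max_age=timedelta(hours=24)):
    """Fail if the dataset has not been refreshed within `max_age`."""
    age = now - last_loaded_at
    return CheckResult("freshness", age <= max_age, f"last load {age} ago")


def check_volume(history, today_count, z_threshold=3.0):
    """Fail if today's row count is more than `z_threshold` standard deviations
    from the mean of recent daily counts (needs at least two history points)."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return CheckResult("volume", today_count == mu, "no variance in history")
    z = (today_count - mu) / sigma
    return CheckResult("volume", abs(z) <= z_threshold, f"z-score {z:.1f}")


# Illustrative inputs: one null in four rows, a load six hours ago,
# and a sharp drop in daily volume.
rows = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 3, "amount": 7.5},
    {"order_id": 4, "amount": 12.0},
]
now = datetime(2026, 1, 15, 12, 0, tzinfo=timezone.utc)
results = [
    check_null_rate(rows, "amount"),
    check_freshness(datetime(2026, 1, 15, 6, 0, tzinfo=timezone.utc), now),
    check_volume([1000, 1020, 980, 1010, 990], today_count=400),
]
for r in results:
    print("PASS" if r.passed else "FAIL", r.name, r.detail)
```

Here the null-rate and volume checks fail while freshness passes, so an alert would fire before the incomplete data reaches a dashboard or model.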

Models evolve over time, or rather, they degrade. Factors like data drift, concept drift, and feedback loops erode a model’s accuracy. Ongoing evaluation is therefore needed to ensure predictions remain accurate, fair, and stable.
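One widely used way to quantify data drift is the Population Stability Index (PSI), which compares how a feature is distributed in production against a reference sample such as the training data. The sketch below is a plain-Python version under stated assumptions: 10 quantile bins, a small floor for empty bins, and the 0.1 / 0.25 cut-offs are common rules of thumb rather than fixed standards, and the Gaussian samples are synthetic. PSI only detects data drift; concept drift (a changed relationship between inputs and outcomes) needs labeled outcomes to measure.

```python
import bisect
import math
import random


def population_stability_index(reference, current, bins=10):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref), where ref and cur are
    the shares of each sample that fall into each bin."""
    ref_sorted = sorted(reference)
    # Interior bin edges at the reference sample's quantiles (bins - 1 edges).
    edges = [ref_sorted[int(len(ref_sorted) * i / bins)] for i in range(1, bins)]

    def shares(values):
        counts = [0] * bins
        for v in values:
            counts[bisect.bisect_left(edges, v)] += 1  # index of the bin v falls in
        eps = 1e-6  # floor empty bins so the log term stays finite
        return [max(c / len(values), eps) for c in counts]

    ref_shares, cur_shares = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


def drift_status(psi, warn=0.1, alert=0.25):
    """Map a PSI value to a status using commonly cited rule-of-thumb cut-offs."""
    if psi >= alert:
        return "significant drift"
    if psi >= warn:
        return "moderate drift"
    return "stable"


random.seed(0)
training = [random.gauss(0.0, 1.0) for _ in range(5000)]
same_dist = [random.gauss(0.0, 1.0) for _ in range(5000)]
shifted = [random.gauss(0.5, 1.0) for _ in range(5000)]  # mean moved by 0.5 std

for name, sample in [("same distribution", same_dist), ("shifted mean", shifted)]:
    psi = population_stability_index(training, sample)
    print(f"{name}: PSI={psi:.3f} -> {drift_status(psi)}")
```

Running such a check on a schedule for each input feature gives an early signal that the model may need re-evaluation, even before ground-truth labels arrive.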

End-to-end lineage maps help data science professionals understand how data moves through systems, right from ingestion to transformation to modeling. This is important for debugging failures and meeting compliance requirements.
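Lineage is usually represented as a directed graph of data assets. The sketch below uses a hypothetical, hand-written lineage map (real platforms typically derive lineage from query logs or orchestration metadata) and walks it in both directions: upstream to find everything a failing asset depends on, and downstream to see what a broken source would affect.

```python
from collections import deque

# Hypothetical lineage map: each asset lists the assets it reads from directly.
LINEAGE = {
    "raw.orders": [],
    "raw.customers": [],
    "staging.orders_clean": ["raw.orders"],
    "staging.customers_clean": ["raw.customers"],
    "marts.customer_ltv": ["staging.orders_clean", "staging.customers_clean"],
    "model.churn_features": ["marts.customer_ltv"],
    "model.churn_predictions": ["model.churn_features"],
}


def upstream(asset, lineage):
    """Every asset that `asset` depends on, directly or transitively (BFS)."""
    seen, queue = set(), deque(lineage.get(asset, []))
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.add(node)
            queue.extend(lineage.get(node, []))
    return seen


def downstream(asset, lineage):
    """Every asset that would be affected if `asset` broke."""
    # Reverse the edges (parent -> children), then reuse the same traversal.
    children = {}
    for node, parents in lineage.items():
        for parent in parents:
            children.setdefault(parent, []).append(node)
    return upstream(asset, children)


print(sorted(upstream("model.churn_predictions", LINEAGE)))  # debugging a bad prediction
print(sorted(downstream("raw.orders", LINEAGE)))  # impact of a broken source
```

The upstream walk narrows where to look when a prediction goes wrong; the downstream walk answers the compliance-style question of which reports and models consumed a given source.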

Some of the most advanced observability platforms use AI to detect anomalies, correlate signals across datasets and models, and identify root causes. This lets teams act faster on intelligent, context-aware alerts instead of drowning in dashboards.
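One simple form of signal correlation is topology-based: when several assets alert at once, the lineage graph can separate the probable root cause from downstream symptoms. The sketch below is far simpler than the AI-driven correlation described above and does not reflect how any specific platform works; it assumes the lineage is acyclic, that anomalies have already been detected per asset, and the asset names are hypothetical. It treats an anomalous asset with no anomalous upstream dependencies as a root cause and groups the remaining alerts beneath it.

```python
def upstream(asset, lineage):
    """Every asset that `asset` depends on, directly or transitively (DFS)."""
    seen, stack = set(), list(lineage.get(asset, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(lineage.get(node, []))
    return seen


def correlate_alerts(anomalous, lineage):
    """Group anomalous assets under likely root causes.

    A root cause is an anomalous asset none of whose upstream dependencies are
    anomalous; every other anomalous asset is listed under each root it depends on.
    """
    anomalous = set(anomalous)
    anomalous_upstream = {a: upstream(a, lineage) & anomalous for a in anomalous}
    roots = sorted(a for a, ups in anomalous_upstream.items() if not ups)
    return {
        root: sorted(a for a, ups in anomalous_upstream.items() if root in ups)
        for root in roots
    }


# Hypothetical lineage: each asset lists the assets it reads from directly.
lineage = {
    "raw.events": [],
    "raw.payments": [],
    "staging.sessions": ["raw.events"],
    "staging.payments": ["raw.payments"],
    "marts.revenue": ["staging.sessions", "staging.payments"],
    "model.forecast": ["marts.revenue"],
}
# Four assets alert at roughly the same time.
alerts = ["model.forecast", "marts.revenue", "staging.sessions", "raw.events"]
print(correlate_alerts(alerts, lineage))
```

Four separate alerts collapse into one incident rooted at `raw.events`, which is the kind of noise reduction that keeps teams out of the dashboards.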

Most importantly, observability is not just a technology but a mindset. Professionals, including data engineers, ML engineers, analysts, and business leaders, must work together to ensure reliability and trust. A culture of transparency is, in fact, the real driver.

How to Build an Observability Strategy for 2026

Organizations need a continuous approach to observability rather than an adopt-and-forget one. This requires:

Data and AI observability is a team effort, and therefore, teams across departments should collaborate and share ownership.

Looking ahead, observability will evolve from simply looking into systems toward intelligent assurance, and the possibilities are wide open. For example, AI and machine learning models will not just report a decline in their accuracy but also suggest retraining on specific datasets. Data pipelines will automatically switch to backup sources, such as a trusted data warehouse, when they detect anomalies, and professionals will be able to view a real-time reliability score for every analytics asset in production.
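The automatic switch to a backup source can already be approximated with a validate-then-fall-back pattern. In the sketch below, the two source readers and the validation rule are hypothetical placeholders; in practice the validation step would reuse the same data quality checks that drive monitoring, and the returned problem list would feed an alert.

```python
from typing import Callable

Rows = list[dict]


def load_with_fallback(
    primary: Callable[[], Rows],
    backup: Callable[[], Rows],
    validate: Callable[[Rows], list[str]],
) -> tuple[Rows, str, list[str]]:
    """Read from `primary`; if the read raises or validation reports problems,
    switch to `backup`. Returns (rows, source_used, problems_with_primary)."""
    try:
        rows = primary()
        problems = validate(rows)
        if not problems:
            return rows, "primary", []
    except Exception as exc:  # broad on purpose: any read/validation failure triggers fallback
        problems = [f"primary failed: {exc}"]

    backup_rows = backup()
    backup_problems = validate(backup_rows)
    if backup_problems:
        raise RuntimeError(f"both sources failed validation: {problems + backup_problems}")
    return backup_rows, "backup", problems


def validate_orders(rows: Rows) -> list[str]:
    """Hypothetical rule set: non-empty and no null amounts."""
    problems = []
    if not rows:
        problems.append("no rows returned")
    if any(r.get("amount") is None for r in rows):
        problems.append("null amounts")
    return problems


def read_live_feed() -> Rows:  # hypothetical primary source with a silent failure
    return [{"order_id": 1, "amount": None}]


def read_warehouse_snapshot() -> Rows:  # hypothetical trusted backup
    return [{"order_id": 1, "amount": 42.0}]


rows, source, problems = load_with_fallback(read_live_feed, read_warehouse_snapshot, validate_orders)
print(source, problems)  # backup ['null amounts']
```

Returning which source was used, rather than switching silently, keeps the fallback itself observable.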

The future of observability really sounds fascinating, doesn’t it?

Final Thoughts

AI maturity is more than just building and deploying efficient models; it requires continuous monitoring and observability. As data grows more complex and data privacy regulations become stricter, organizations are looking for clear observability, and 2026 will be remembered as the year it became essential. Data and AI observability ensures that models are transparent, accountable, and resilient.

Are you looking for ways to master AI and data science skills? Learn how to build, deploy, and maintain transparent and explainable models with top data science certifications from USDSI® and enhance your credibility in the industry.
