Managing environments is often the toughest part of data science. Models might work perfectly on one machine, yet fail to run on a different machine due to missing packages or version mismatches. Issues with running a model on another machine can be frustrating, waste time, and hinder collaboration.

Docker overcomes these issues by creating very thin containers that package the code, dependencies, and configuration into one single portable image. This means consistency and reproducibility, and improves the speed of executing data science workflows.

As per Modar Intelligence, the global application container market is anticipated to grow from USD 10.27 billion by 2025 to USD 29.69 billion by 2030, growing at a CAGR of 23.64%. The growth is indicative of the increased adoption of containerization technologies like Docker by organizations across many industry sectors.

The following blog covers five actionable steps toward mastering Docker for your data science projects, from the basics to scaling for production.

What is Docker?

Applications can be automatically deployed inside portable, lightweight containers using the open-source Docker platform. Whether your application is running on a developer's laptop, a test server, or a cloud platform, a container ensures that the code, libraries, dependencies, and configuration are all packaged together.

Because a container shares the host system's operating system kernel while maintaining process isolation, it can be thought of as a miniature virtual machine that is faster and much smaller.

As an example, suppose you used your local system to train a machine learning model. When your colleague attempts to run it on their computer, they encounter missing libraries or version discrepancies. By reproducing the same environment across computers, Docker containers circumvent this.

Practical Applications of Docker in Modern Development

Docker’s container-based platform enables highly portable workloads that can run seamlessly on a developer’s laptop, on-premises servers, or cloud environments. This portability simplifies application deployment and allows businesses to dynamically manage workloads, scaling applications up or down as needed.

By isolating applications within containers, Docker ensures consistent performance, faster rollout, and efficient resource utilization across diverse environments.

Understanding Docker for Data Science Through Steps

Docker simplifies the journey from development to production by breaking down complex workflows into manageable stages. These steps ensure consistency, scalability, and reproducibility across your data science projects.

Step 1: Installing and Setting Up Docker

Docker must be correctly installed on your system before you can begin building data science containers. The engine that Docker offers enables you to package and run apps uniformly across various devices, including servers, laptops, and the cloud.

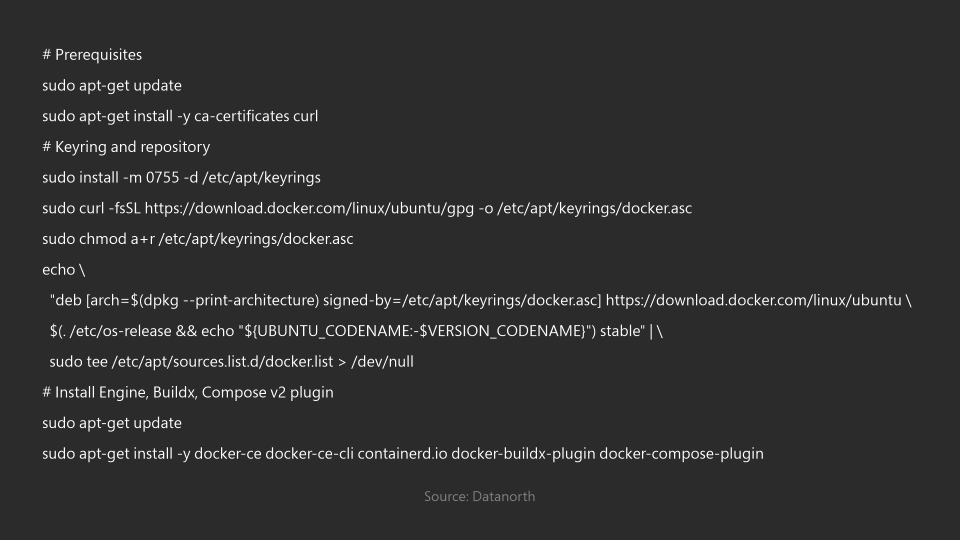

Installing Docker on Linux systems, such as Ubuntu, entails adding the official repository, setting up the engine, and adjusting user permissions so that sudo isn't always required. Docker Desktop offers a user-friendly graphical user interface that includes all the necessary components for Windows and macOS.

Once Docker is installed, verify it with:

docker --version

docker run hello-world

This confirms your system is ready to start building containers.

Step 2: Building a Reproducible Environment with Dockerfiles

Reproducibility is at the core of data science. It should be feasible to retrain a model under the same circumstances tomorrow, after it has been trained today. Dockerfiles, which are blueprints that specify the environment in which your code runs, can help with that.

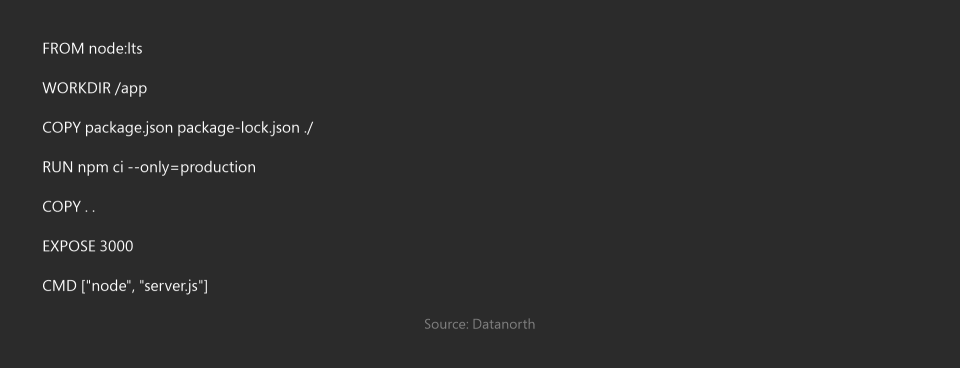

A standard Dockerfile defines the command to execute, installs dependencies, copies in your source code, and specifies a base image. You can make sure that your environment is portable and locked down by doing this.

The same reasoning holds true for data science workflows, even though the screenshot depicts a Node.js application. For example, you could begin with Python:3.11-slim, set CMD ["python", "analysis.py"] as your entrypoint, and install packages from requirements.txt.

Pro Tip: To prevent big files like datasets, cache, or checkpoints from being copied into your image, use.dockerignore. This keeps your builds quick and light.

Step 3: Managing Dependencies Across Stages

There is rarely a single environment in real-world projects. You could:

It is cumbersome and challenging to maintain when everything is installed in a single container. Rather, Docker gives you the option to: Employ multi-stage builds, in which only the necessary tools are added at each stage, or

Divide the project into distinct containers for serving, training, and preprocessing.

For instance:

Because of this, each image is kept compact and effective, and switching or updating one stage is simple without affecting the others.

Important Tip: Use folders like src/ for scripts, config/ for settings, and data/mounted externally to organise your project. This structure expedites rebuilding and works in tandem with Docker's caching system.

Step 4: Running and Orchestrating Multi-Service Workflows

Pipelines for data science are rarely isolated. You might require an API to serve results, a notebook environment for experimentation, and a database for structured data. It can get messy to run these one at a time.

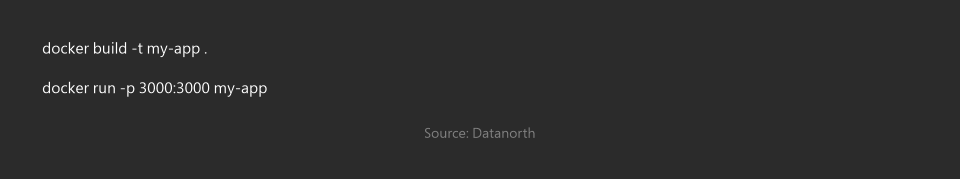

In the past, you would manually create and launch a container using:

The core of containerised workflows is depicted in this image. No matter where they run, it shows how containers are spun up and communicate with one another to maintain consistency.

The main lesson is that although you can manually start containers, Docker really excels at coordinating several services at once. Databases, APIs, and notebooks can all be defined as part of a single workflow using tools like Docker Compose, ensuring that everything functions together under a single command.

Your entire pipeline, from ingesting raw data to serving models, can function flawlessly in a controlled, repeatable setting by using this method.

Step 5: Moving from Local Development to Production

It's simple to run containers on your laptop, but there are additional factors to take into account when scaling them for production:

By following these guidelines, you can make sure that your data science tasks are scalable, secure, and dependable in practical settings.

Why Docker Matters for Data Science

Let’s recap the main benefits:

Common Pitfalls in Docker for Data Science and How to Navigate

1. Large Images: Use multi-stage builds and lightweight base images to avoid large images.

2. Version Conflicts: Lock dependency versions and isolate projects in different containers.

3. Data Management: Mount models and datasets rather than baking them into pictures.

4. Security Risks: Use non-root containers and store secrets in configuration files or environment variables.

5. Debugging Difficulty: For fast fixes, enable logging, health checks, and mount code.

6. Workflow Complexity: To effectively manage multi-service projects, use Docker Compose.

7. GPU Problems: Make sure the drivers are compatible for ML/DL workloads and use NVIDIA Docker.

Advice: Examine Dockerfiles frequently to eliminate unnecessary dependencies and enhance efficiency.

Conclusion

Becoming proficient in Docker is not just a series of commands you have to learn but rather a way of thinking about managing data science projects. Containers provide multiple benefits, including consistency, reproducibility, and scalability, which will result in a more efficient workflow, enhanced collaboration between team members, and a more dependable way of deploying real-world applications.

If you are considering advancing your data science journey, structured learning can also help you develop a more refined skill set. The USDSI® Data Science Certifications are developed to provide professionals with practical, industry-relevant skills to work elegantly with tools like Docker. With the right qualifications and learning, you can feel empowered to modernize your practice, enabling more complex problem solving in a rapidly evolving field.

This website uses cookies to enhance website functionalities and improve your online experience. By clicking Accept or continue browsing this website, you agree to our use of cookies as outlined in our privacy policy.