Manually tagging metadata and tracking SQL lineage is known to be a time-consuming and error-prone task for data engineers. Even though it is very important for compliance and data governance, these tasks require extensive review of datasets, table schemas, and SQL scripts.

Fortunately, there are several large language models (LLMs) such as GPT-4 that offer smarter and more intelligent solutions. In this guide, we will tell you how beginner data science professionals, like data engineers, can learn how to use different LLMs along with popular tools like OpenMetadata, dbt, Trino, and Python APIs to automate tasks like identifying PII or other metadata tagging and lineage tracking for SQL changes.

What is Metadata Tagging and Lineage Tracking?

Meta tagging refers to the process of adding descriptive labels to data assets such as tables, columns, or other files to provide context and offer better discoverability and usability, along with support for data governance.

Metadata can be a set of data that describes what the data is all about, such as the name of the project, the title of a product, or anything else that makes it easier to find and understand data. In short, the purpose of metadata is to help users retrieve information easily and quickly. Thus, users can access the desired data whenever they need it without going through too many steps.

On the other hand, SQL Lineage Tracking is an important practice in data management that helps users track the journey of data from its source to its final form, along with various stages of processing. The purpose of Lineage Tracking is to understand the data’s origin and how it has been used over time and thus help the data professionals get insights into the quality, integrity, and reliability of data.

What is the Importance of Metadata Tagging and Lineage Tracking Matter?

Before we get into the implementation part, let's understand why metadata tagging and SQL lineage tracking are important. While metadata tagging labels the data elements, such as marking columns as PII, financial, public, etc., and supports compliance with regulations like GDPR and HIPAA, the SQL lineage tracking is used to map the flow of data across different systems, from source tables to final outputs which ultimately enhance transparency and traceability.

Together, both of these practices offer great advantages to improve data governance by:

They ultimately serve as a great foundation for responsible and efficient data processing.

Tools to be Used

Here are the following tools you will be using to build an automated pipeline:

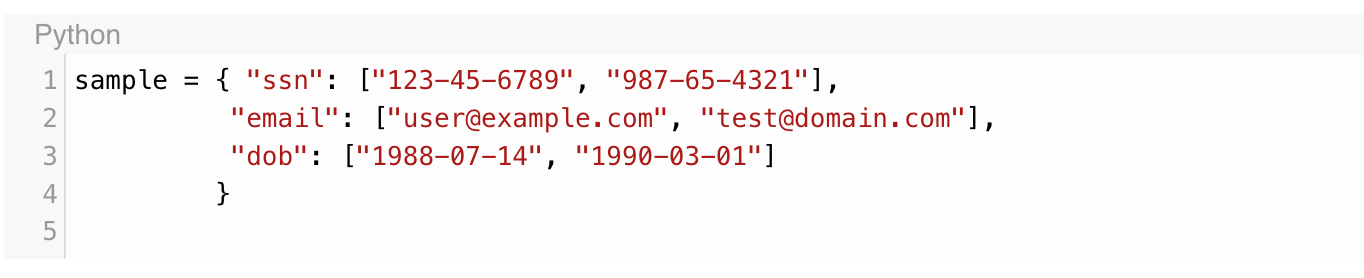

Step 1: Sample Column Data for Context

The first step in this process is to extract a sample of your table’s data so that models can understand column content.

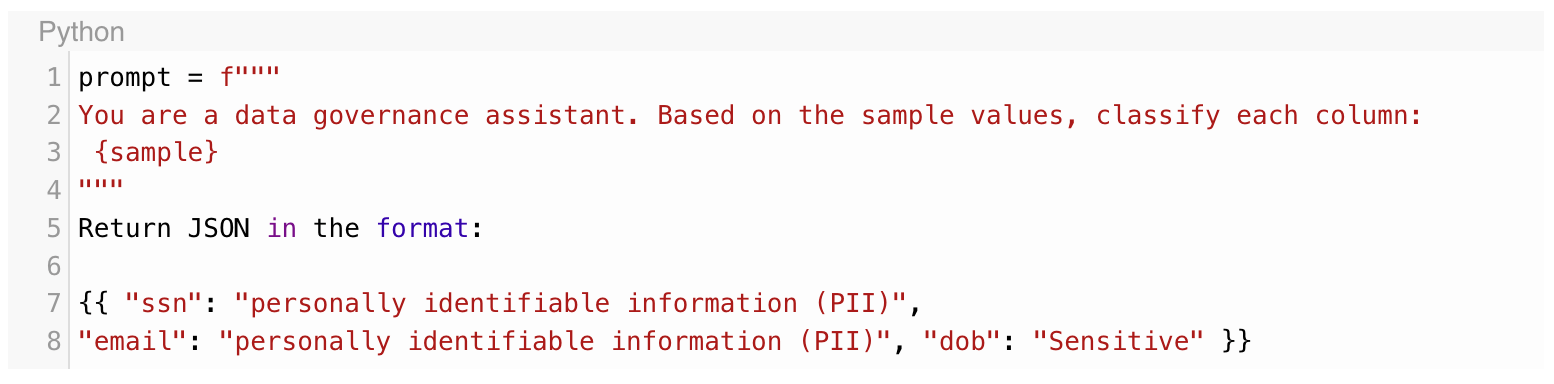

Step 2: Send it to GPT-4 for PII Classification

The next step is to construct a prompt that will classify your columns using the LLM

Make an API call by using OpenAI’s Chat API as follows:

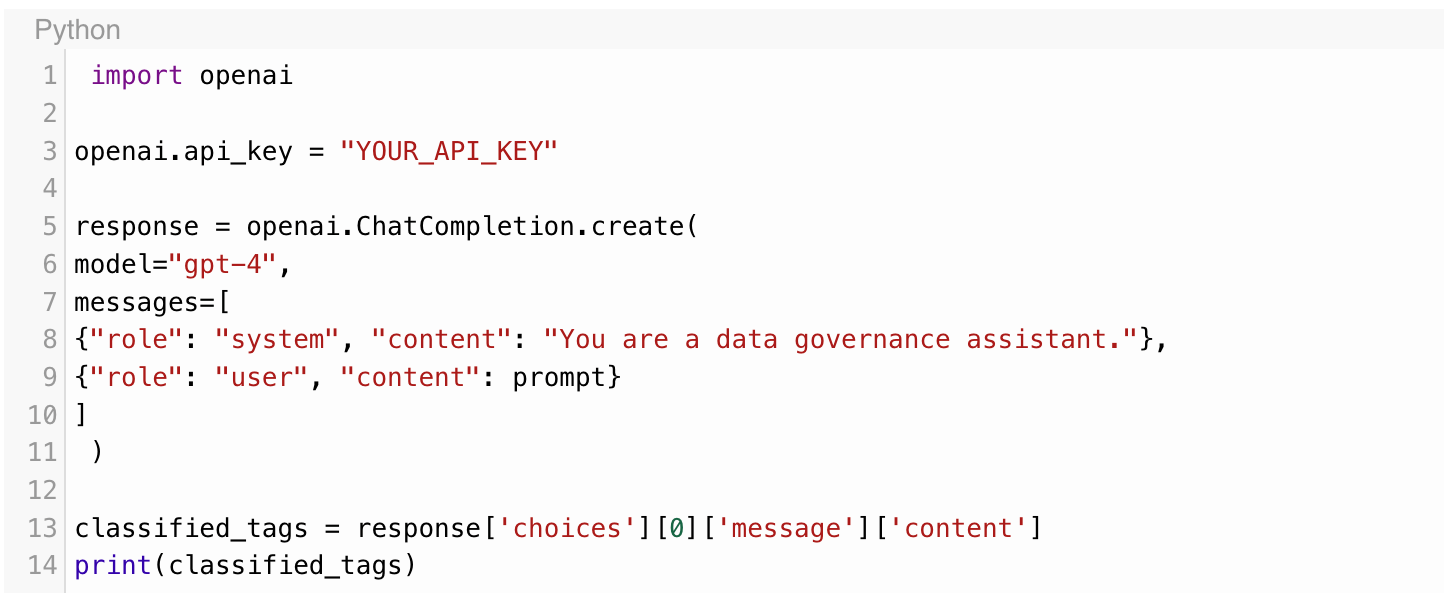

Step 3: Push Tags into OpenMetadata

After having the classification results, you need to push the tags into the metadata catalog

Step 4: Tracking SQL Lineage Using LLMs

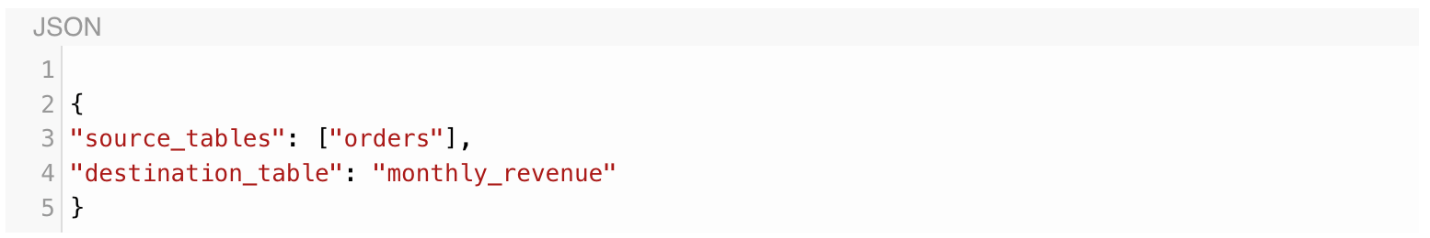

Next, you have to track lineage by extracting the flow of data in SQL statements. So, take a sample query:

![]()

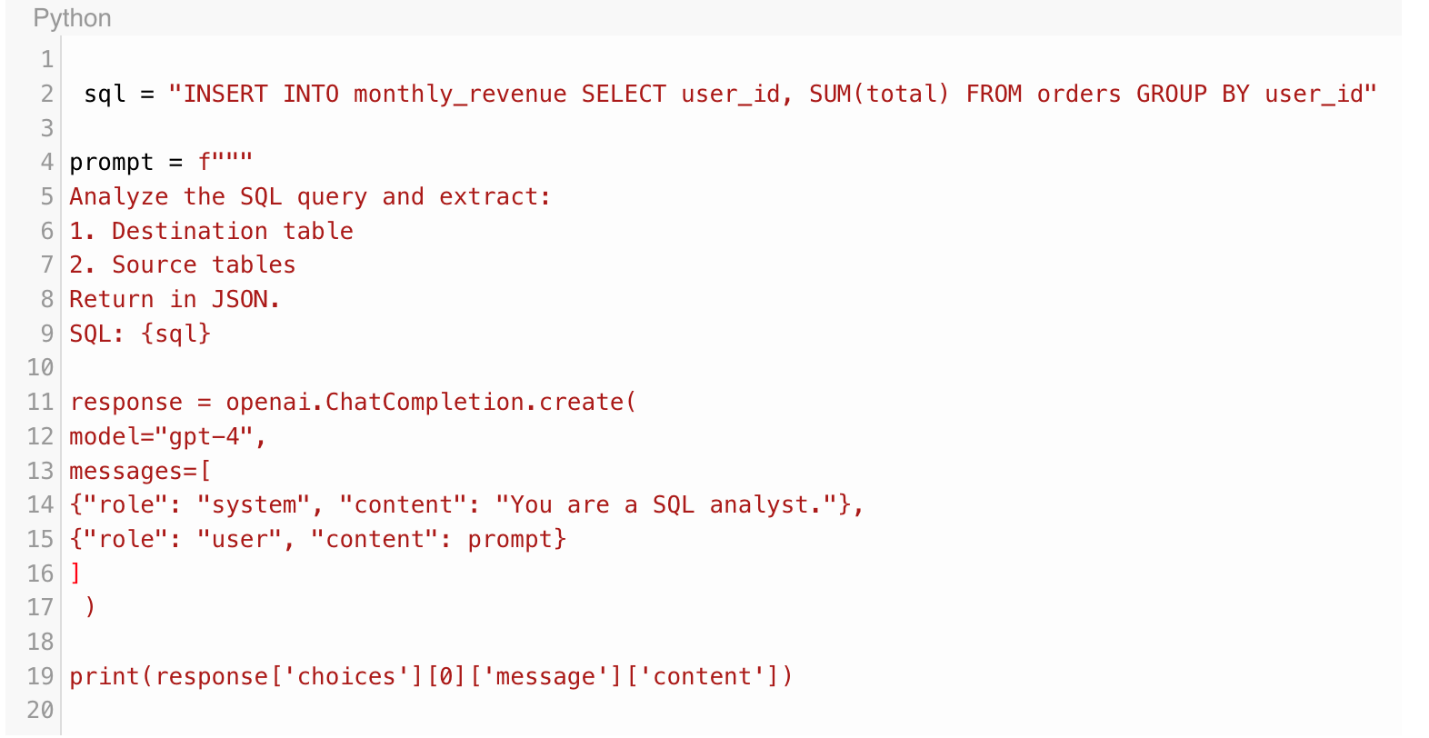

And, feed it to GPT-4 for parsing:

Expected Output:

Step 5: Add PII Tagging to CI/CD Pipelines

To avoid sensitive data leaks or missed tags in production, integrate LLM-based tagging into your CI/CD pipelines using tools like GitHub Actions for automated enforcement.

Things to Consider!

Though this method can be quite effective in automating metadata tagging and lineage tracking, there are a few things data engineers must pay attention to:

Summing up!

The large language models (LLMs) such as GPT-4 or anything else can be really helpful in automating data governance tasks that once demanded manual labor. LLMs can be your great assistant and make your metadata layer smarter, scalable, and audit-ready, for all kinds of tasks, from personally identifiable information (PII) detection to metadata tagging to SQL lineage tracking.

You can start with small steps and a small sample first. Start by tagging one table and visualizing one SQL flow. Then, you can implement it in your metadata platform or CI/CD workflows.

So, we can conclude how automation can help even beginner data engineers bring their data catalogs by using minimal code and the right APIs and saving hours of manual work.

This website uses cookies to enhance website functionalities and improve your online experience. By clicking Accept or continue browsing this website, you agree to our use of cookies as outlined in our privacy policy.