Although many analytics practitioners are recognizing the usefulness of AI capabilities, the reality for most data practitioners’ day to day has changed little. The 2025 State of the Data Analyst Report, published by Alteryx, reported that 76% of analysts stated they still rely on spreadsheets for basic work, which includes core activities such as data cleaning and data preparation. Even more amazing was that nearly 45% of analysts were reporting having spent more than six hours each week managing messy and inconsistent data before conducting actual analysis.

Pandas has been the main library for cleaning and working with tabular data for quite some time. However, datasets are growing larger, and data pipelines are becoming more complex. As a result, pandas alone are often simply no longer enough. In this regard, modern data processing libraries, including Dask, Polars, and DuckDB, are changing the landscape and advancing data engineering workflows, data science workflows, and data analytics processes beyond pandas’ products while providing speed, scalability, and efficiency.

Pandas is Reliable, But It Has Its Limits

Pandas has become a foundational package with respect to data science and engineering because of its power and general functionality. It offers:

Given that pandas is built to complete everything in memory and uses a single processing core, its performance can start to decline when:

In these situations, other libraries that are designed to deal with data workflows that are larger and potentially more complex may make for a better option.

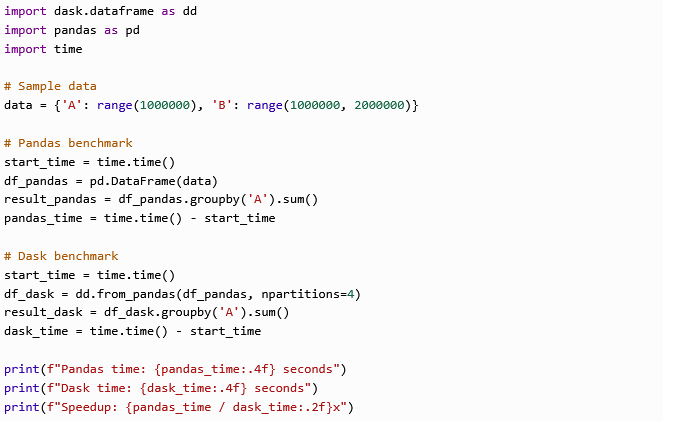

Dask: For When Your Data is Too Big for Pandas

Dask builds the pandas interface into one that supports datasets larger than memory and parallel computing. It enables you to perform operations in chunks and scale operations to multiple cores or even to a cluster.

The strengths of Dask:

Example using Dask:

Output

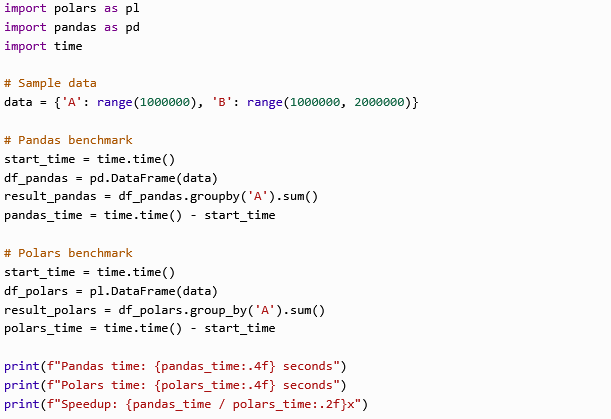

Polars: Speed and Efficiency for Large Datasets

Polars is a new DataFrame library that is developed in Rust and built on top of Apache Arrow. It supports both eager and lazy execution. Polars was built for performance and efficiency.

Reasons Polars is becoming popular:

Example using Polars:

Output

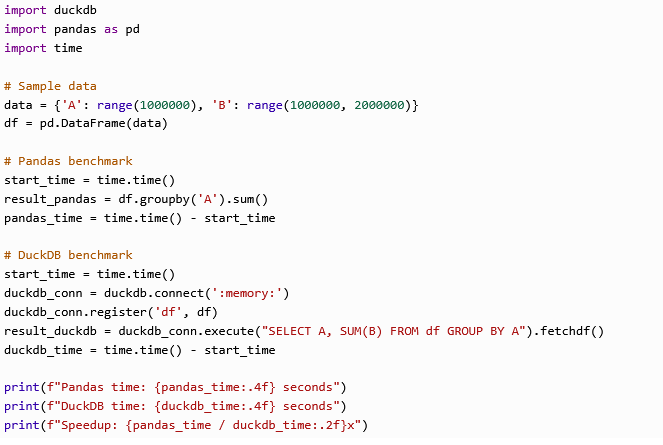

DuckDB: SQL for Fast Analytics Without Extra Setup

DuckDB allows powerful SQL analytics to be integrated into your workflow without having to install a separate database server. It's designed for in-process analytics, like SQLite is, but tweaked for analytical queries.

When to use DuckDB:

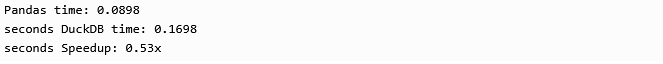

Example using DuckDB:

Output

Modin: An Easy Way to Make Pandas Run Faster

If your current pandas code is slow and you don't want to rewrite everything, Modin can help you. Modin is capable of distributing pandas’ operations across all of your CPU cores with only the change of a one-line import.

The advantages of Modin:

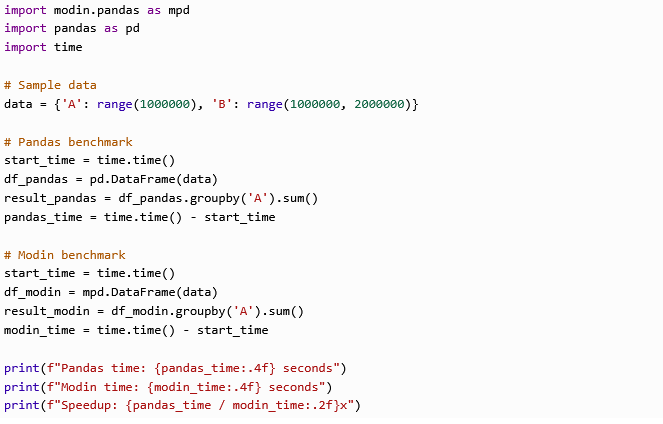

Example using Modin:

Output

FireDucks: Speed Up Pandas Without Changing Code

FireDucks is a modern, JIT-compiled DataFrame library that fully implements the pandas API. It has a multithreaded backend, supports lazy execution, and does all of the optimization automatically with no changes to your code.

Key Benefits:

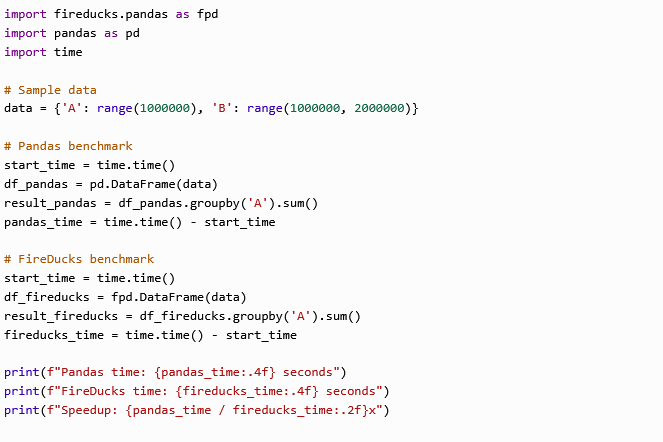

Example using Fireducks:

Output

Conclusion

The Python data ecosystem has expanded well beyond pandas, providing data professionals meaningful tools to use while working with big and complex datasets. The likes of Dask, Polars, DuckDB, Modin, Datatable, etc., help make extension possible without trying to replace the experience of using pandas.

For professionals in data science and data engineering, knowing when to use these tools is important in developing workflows that can scale and be trusted. There are trusted resources, passions for learning, and certifications, such as those associated with USDSI®, that can help keep you up to date in this ever-changing space.

This website uses cookies to enhance website functionalities and improve your online experience. By clicking Accept or continue browsing this website, you agree to our use of cookies as outlined in our privacy policy.