As data science becomes deeply integrated into business operations, selecting the right data platform has never been more important.

With the right data platform, organizations can strengthen their ability to innovate, uncover new insights, and stay competitive in an evolving business landscape.

Snowflake and Databricks are two of the leading platforms in the modern data ecosystem. Both offer powerful solutions for big data, analytics, and machine learning, but they cater to different needs and have different strengths.

This article explores the differences between the two, comparing their architectures, use cases, performance, and other factors to help you make the right choice.

Understanding the Platforms

What is Databricks?

Databricks is a unified data analytics platform built on Apache Spark. It was founded by the creators of Spark and offers an open and collaborative environment for data engineers, data scientists, business analysts, and other data science professionals.

Databricks focuses on data engineering, machine learning, and real-time analytics, and it has a strong foundation in open-source technologies such as Delta Lake, MLflow, and Apache Spark.

It also provides strong support for end-to-end data processing, such as ETL (extract, transform, load) pipelines and advanced analytics, which makes it well suited to data science workflows.
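The ETL pattern itself is platform-independent. The following is a minimal, platform-agnostic sketch of the three stages in plain Python: the CSV input is hypothetical and `sqlite3` merely stands in for a target table. It does not use any Databricks API. On Databricks, the same steps would typically run as Spark jobs over much larger datasets.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,,usd
3,5.00,eur
"""


def extract(text: str) -> list[dict]:
    """Extract: parse CSV text into one dict per row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple[int, float, str]]:
    """Transform: drop rows missing an amount, cast types, normalize currency codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return cleaned


def load(rows: list[tuple[int, float, str]], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone())
```

Keeping each stage a separate function makes it easy to test the transformation logic on its own before pointing the pipeline at real sources and sinks.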

What is Snowflake?

Snowflake, on the other hand, is a cloud-based data warehousing platform. It is popular and widely used for its simplicity, scalability, and performance. It can support structured and semi-structured data and is optimized for SQL analytics.
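Semi-structured data such as JSON does not fit a fixed set of columns in advance. In Snowflake, such data is typically stored in a `VARIANT` column and unnested with the `FLATTEN` table function. The plain-Python sketch below only mimics that idea by producing one flat row per nested array element. The event data and field names are hypothetical, and this is not how Snowflake implements it.

```python
import json

# Hypothetical JSON events, e.g. as they might land in a semi-structured column.
RAW_EVENTS = [
    '{"user": "a", "items": [{"sku": "x1", "qty": 2}, {"sku": "x2", "qty": 1}]}',
    '{"user": "b", "items": []}',
    '{"user": "c", "items": [{"sku": "x1", "qty": 5}]}',
]


def flatten_items(raw_events: list[str]) -> list[dict]:
    """Emit one flat row per element of each event's "items" array.

    Events whose array is empty (or missing) produce no rows.
    """
    rows = []
    for raw in raw_events:
        event = json.loads(raw)
        for item in event.get("items", []):
            rows.append({"user": event["user"], "sku": item["sku"], "qty": item["qty"]})
    return rows


for row in flatten_items(RAW_EVENTS):
    print(row)
```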

Snowflake separates storage from compute, so each can be scaled independently. It is known for its easy-to-use interface, fast SQL query performance, and built-in data sharing features.

Comparing Databricks and Snowflake

Databricks Architecture

Databricks is built on the Lakehouse model, which combines elements of data lakes (low-cost storage, flexibility) with those of data warehouses (performance, ACID transactions).
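The idea of "ACID transactions on low-cost storage" becomes clearer with a concrete mechanism. Table formats such as Delta Lake record every change to a table as an ordered entry in a transaction log. Readers reconstruct the table's state by replaying that log, and a commit becomes visible only once its log entry exists. The sketch below is a heavily simplified, hypothetical version of that approach. The directory layout, class name, and JSON fields are assumptions and are much simpler than Delta Lake's actual protocol. It relies on `os.link` being atomic, which holds on POSIX filesystems.

```python
import json
import os
import tempfile
import uuid


class ToyLogTable:
    """Toy table whose state is defined by an append-only commit log.

    Hypothetical layout (not Delta Lake's real format):
      <root>/data/<uuid>.json   immutable data files
      <root>/log/00000.json     commit 0, then 00001.json, ...
    """

    def __init__(self, root: str):
        self.data_dir = os.path.join(root, "data")
        self.log_dir = os.path.join(root, "log")
        os.makedirs(self.data_dir, exist_ok=True)
        os.makedirs(self.log_dir, exist_ok=True)

    def _versions(self) -> list:
        # Only published commits end in ".json"; temporary files are ignored.
        return sorted(int(n[:-5]) for n in os.listdir(self.log_dir) if n.endswith(".json"))

    def commit(self, rows: list, remove: tuple = ()) -> int:
        """Write a new data file, then publish it via the next log entry."""
        data_file = f"{uuid.uuid4().hex}.json"
        with open(os.path.join(self.data_dir, data_file), "w") as f:
            json.dump(rows, f)

        versions = self._versions()
        version = versions[-1] + 1 if versions else 0
        tmp = os.path.join(self.log_dir, f"{version:05d}.{uuid.uuid4().hex}.tmp")
        with open(tmp, "w") as f:
            json.dump({"add": [data_file], "remove": list(remove)}, f)
        try:
            # os.link atomically creates the final name and raises FileExistsError
            # if another writer already claimed this version, so readers never see
            # a half-written commit and two commits cannot share a version.
            os.link(tmp, os.path.join(self.log_dir, f"{version:05d}.json"))
        finally:
            os.remove(tmp)
        return version

    def live_files(self, as_of=None) -> set:
        """Replay the log, optionally stopping at version `as_of`."""
        files = set()
        for v in self._versions():
            if as_of is not None and v > as_of:
                break
            with open(os.path.join(self.log_dir, f"{v:05d}.json")) as f:
                entry = json.load(f)
            files |= set(entry["add"])
            files -= set(entry["remove"])
        return files

    def read(self, as_of=None) -> list:
        rows = []
        for name in sorted(self.live_files(as_of)):
            with open(os.path.join(self.data_dir, name)) as f:
                rows.extend(json.load(f))
        return rows


with tempfile.TemporaryDirectory() as root:
    table = ToyLogTable(root)
    table.commit([{"id": 1}, {"id": 2}])
    (old_file,) = table.live_files()
    table.commit([{"id": 3}], remove=(old_file,))  # atomically replace the first file
    print(table.read())         # current state
    print(table.read(as_of=0))  # state as of the first commit
```

Replaying the log up to an earlier version also gives a simple form of "time travel" reads. A production implementation would additionally retry on commit conflicts and clean up orphaned data files.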

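Key components of Databricks include Apache Spark for distributed data processing, Delta Lake for storing tables with ACID transactions on top of data lake storage, and MLflow for managing the machine learning lifecycle.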

Databricks is best suited for ETL, data science, and machine learning workloads because of its flexible and open architecture.

Snowflake Architecture

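Snowflake is primarily a cloud data warehouse. Its architecture separates three layers: database storage, query processing (compute provided by virtual warehouses), and cloud services (metadata management, security, and query optimization).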

Important features of Snowflake:

Snowflake is best suited for BI, reporting, and SQL-based analytics.

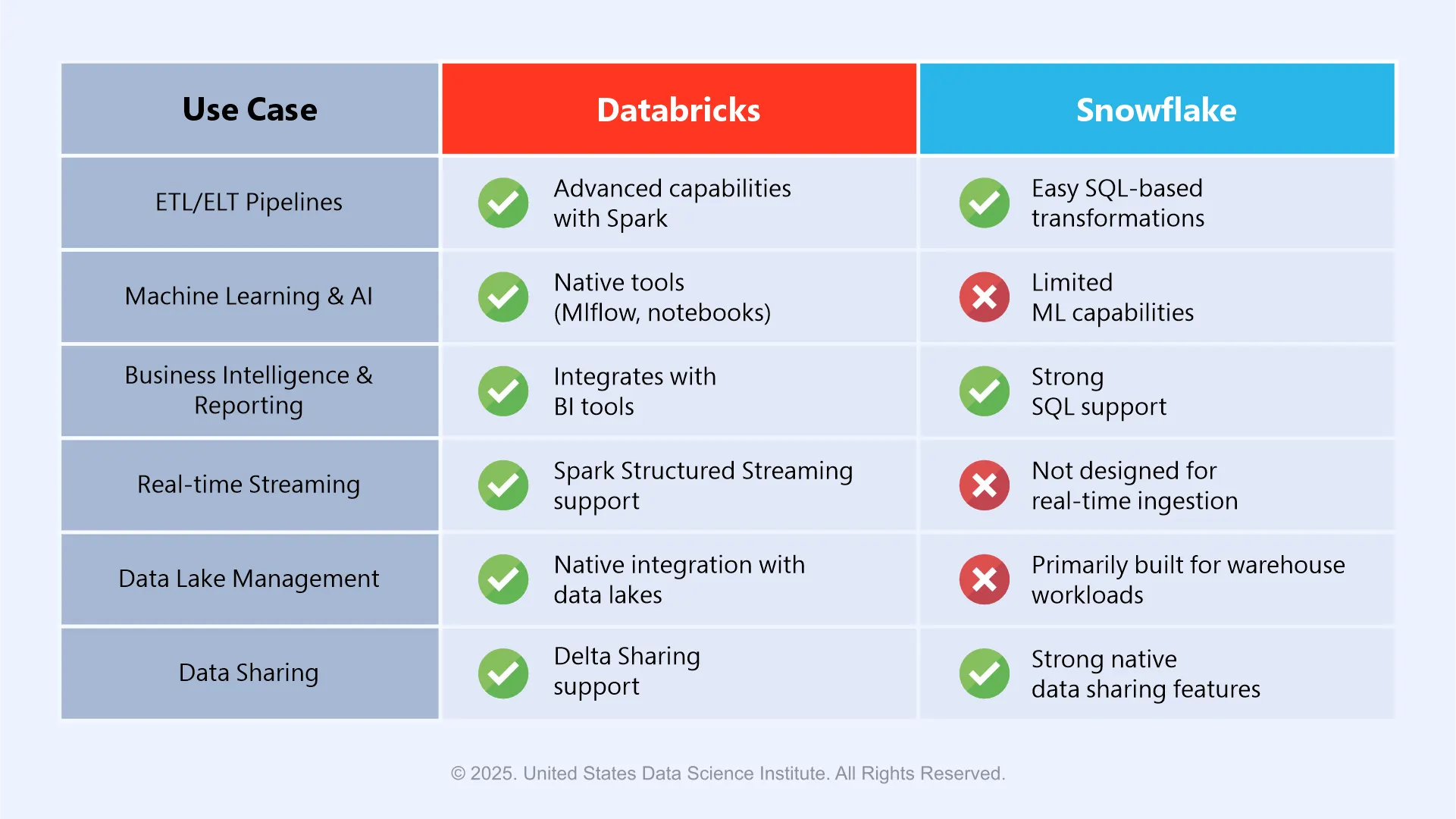

Use Cases

Performance

Databricks Performance

Databricks is optimized for big data workloads and offers strong support for parallelism and in-memory computing through Spark. Photon, Databricks' native vectorized query engine, significantly improves its SQL performance.
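Spark's parallelism comes from splitting a dataset into partitions, processing each partition independently, and then merging the partial results. The plain-Python sketch below shows that partition, aggregate, and merge pattern for a grouped sum using `concurrent.futures`. It is an illustration of the idea, not Spark or Photon code, and the default partition count of 4 is an arbitrary assumption.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partial_sums(partition: list) -> Counter:
    """Map step: aggregate one partition locally into per-key partial sums."""
    totals = Counter()
    for key, value in partition:
        totals[key] += value
    return totals


def parallel_group_sum(records: list, num_partitions: int = 4) -> dict:
    # Split the records into partitions (round-robin).
    partitions = [records[i::num_partitions] for i in range(num_partitions)]
    merged = Counter()
    # Each partition is handed to a worker thread. In CPython, pure-Python
    # threads do not run CPU-bound work truly in parallel; the point here is
    # the partition/merge structure that Spark distributes across executors.
    with ThreadPoolExecutor() as pool:
        for partial in pool.map(partial_sums, partitions):
            merged.update(partial)  # Reduce step: Counter.update adds counts
    return dict(merged)


data = [("eu", 3), ("us", 5), ("eu", 2), ("apac", 7), ("us", 1)]
print(parallel_group_sum(data))
```

Because each partition produces a small partial result, only those partial results need to be combined, which is what lets this pattern scale out across many machines.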

Snowflake Performance

Snowflake is designed for fast SQL analytics and delivers strong performance on structured and semi-structured data. Its automatic optimization and scaling features provide consistent query performance.

However, for complex transformations or ML workloads, it relies on integration with external services such as Amazon SageMaker or DataRobot, which adds overhead and complexity.

Scalability

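Both platforms scale well, but they take different approaches. Snowflake scales compute independently of storage by resizing virtual warehouses or adding clusters to a multi-cluster warehouse to handle more concurrent queries. Databricks scales by adding or removing worker nodes in its Spark clusters, either manually or automatically through autoscaling.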
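Both platforms can adjust compute to load automatically within limits that the user configures. Examples include a minimum and maximum cluster count for a Snowflake multi-cluster warehouse, or a minimum and maximum number of workers for a Databricks autoscaling cluster. The function below is a deliberately simplified, hypothetical scaling rule of that kind. Neither platform documents its policy as this formula, and `capacity_per_unit` is an assumed figure for how much pending work one cluster or worker can absorb.

```python
import math


def target_size(pending_work: int, capacity_per_unit: int, min_units: int, max_units: int) -> int:
    """Hypothetical autoscaling rule: enough units (clusters or workers) to
    cover the pending work, clamped to the configured [min_units, max_units]."""
    if capacity_per_unit <= 0:
        raise ValueError("capacity_per_unit must be positive")
    if not 0 <= min_units <= max_units:
        raise ValueError("require 0 <= min_units <= max_units")
    needed = math.ceil(pending_work / capacity_per_unit)
    return max(min_units, min(max_units, needed))


# Hypothetical queue lengths observed over time.
for queued in [0, 3, 12, 40]:
    print(queued, target_size(queued, capacity_per_unit=8, min_units=1, max_units=4))
```

The upper bound is what keeps automatic scaling from turning a load spike into an unbounded bill, while the lower bound keeps some capacity warm.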

Pricing

Snowflake uses a consumption-based pricing model in which customers pay separately for storage and for compute (measured in credits). This makes costs flexible and relatively predictable.
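To make the storage/compute split concrete: a standard Snowflake warehouse consumes credits per hour according to its size, with each size step doubling the rate (X-Small 1, Small 2, Medium 4, Large 8, X-Large 16). Compute is billed per second, with a 60-second minimum each time a warehouse starts or resumes. Storage is billed separately as a flat rate per terabyte per month. The sketch below adds these components together. The price per credit and per terabyte vary by edition, cloud, and region, so the values used here are hypothetical.

```python
# Credits per hour for a standard warehouse; each size step doubles the rate.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def compute_credits(size: str, run_seconds: float) -> float:
    """Credits for one continuous run after the warehouse starts or resumes.

    Billing is per second, with a 60-second minimum per start/resume.
    """
    billed_seconds = max(run_seconds, 60)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


def monthly_cost(runs, price_per_credit: float, storage_tb: float, price_per_tb_month: float) -> float:
    """Compute and storage are billed independently and simply added up."""
    compute = sum(compute_credits(size, secs) for size, secs in runs) * price_per_credit
    storage = storage_tb * price_per_tb_month
    return compute + storage


# Hypothetical month: a 30-second query burst, a 30-minute job, a 2-hour load.
runs = [("M", 30), ("M", 1800), ("XS", 7200)]
print(monthly_cost(runs, price_per_credit=3.0, storage_tb=2.0, price_per_tb_month=23.0))
```

The 30-second burst is billed as a full minute, which is why very short, frequent resumes can cost more than their raw runtime suggests.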

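Databricks, on the other hand, offers pay-as-you-go pricing as well as discounted committed-use (reserved) plans. Usage is measured in Databricks Units (DBUs), and DBU rates differ between compute types, for example interactive all-purpose clusters versus automated jobs clusters. Costs therefore vary with the size of the Spark clusters and how long they run over the course of your project.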
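On Databricks, each node consumes DBUs per hour at a rate that depends on its instance type, and the price per DBU depends on the compute type, pricing tier, and cloud. On classic (non-serverless) compute, the cloud provider also bills the underlying virtual machines separately. The sketch below estimates both parts for one cluster. All rates are hypothetical placeholders, not published prices.

```python
def cluster_cost(num_nodes: int, dbu_per_node_hour: float, hours: float,
                 price_per_dbu: float, vm_price_per_node_hour: float) -> dict:
    """Estimate the cost of running one cluster (driver + workers) for `hours`.

    All rates are caller-supplied assumptions; real values depend on the
    instance type, compute type, pricing tier, and cloud provider.
    """
    dbus = num_nodes * dbu_per_node_hour * hours
    databricks_cost = dbus * price_per_dbu                   # billed by Databricks
    cloud_vm_cost = num_nodes * vm_price_per_node_hour * hours  # billed by the cloud
    return {
        "dbus": dbus,
        "databricks_cost": databricks_cost,
        "cloud_vm_cost": cloud_vm_cost,
        "total": databricks_cost + cloud_vm_cost,
    }


# Hypothetical 1 driver + 4 workers: a 3-hour job vs. a cluster left up for 10 hours.
for hours in (3, 10):
    print(hours, cluster_cost(5, dbu_per_node_hour=0.75, hours=hours,
                              price_per_dbu=0.15, vm_price_per_node_hour=0.20))
```

Because every term scales linearly with `hours`, a cluster that stays running after its work is done keeps accruing both DBU and VM charges.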

Snowflake or Databricks: Which one to choose?

Both Snowflake and Databricks are powerful platforms, but choosing the right one requires careful consideration of your needs and project requirements.

The following criteria can help you make the right choice:

Nature of Workloads

Choose Snowflake if your organization focuses on business intelligence, reporting, and SQL-based analytics, because it is optimized to run analytical queries on structured data with little overhead.

Databricks would be a better choice if you want to perform data engineering, machine learning, or real-time data streaming. This is because of its Apache Spark foundation and its support for advanced data science workflows.

User Skillset

Snowflake is a better choice for teams with strong SQL expertise who prefer to work with data through a traditional data warehousing interface.

Databricks is better suited for organizations whose data professionals are experienced with distributed computing, Python, or Scala and are comfortable working in a notebook-based environment.

Data Complexity

Snowflake is an easy-to-use, scalable solution for structured and semi-structured data. It also integrates easily with BI tools such as Tableau and Power BI.

If you are working on unstructured data or scenarios that require complex data transformation, then Databricks will provide a more flexible solution where you can work with a wide variety of data formats.

Machine Learning and AI

If your business is focused on machine learning and AI, Databricks is the better choice: it offers a comprehensive solution thanks to its integration with ML libraries and its support for collaborative, interactive analysis.

Snowflake is primarily a data warehouse platform. It can integrate with external ML tools, which should be sufficient if machine learning is only a small part of your organization's workload.

Cost

Snowflake offers greater cost predictability for data warehousing workloads, especially when your usage involves periodic analytical queries. Its multi-cluster compute model and the ability to automatically suspend and resume resources help manage and optimize costs effectively.
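Snowflake's auto-suspend setting stops a warehouse after a configurable idle period, and auto-resume restarts it when the next query arrives. Each resume is billed for at least 60 seconds. The function below is a simplified, hypothetical model of that behaviour for a single warehouse. It ignores multi-cluster scaling and other details and is only meant to show how the idle timeout affects billed time.

```python
def billed_seconds(queries, auto_suspend: int) -> int:
    """Approximate billed warehouse seconds for (start, duration) query pairs.

    Simplified model: the warehouse resumes when a query arrives while it is
    suspended, stays up while queries run, suspends after `auto_suspend` idle
    seconds, and each resume is billed for at least 60 seconds.
    """
    total = 0
    session_start = busy_until = None
    for start, duration in sorted(queries):
        if session_start is not None and start <= busy_until + auto_suspend:
            # Warehouse is still running: extend the current session.
            busy_until = max(busy_until, start + duration)
            continue
        if session_start is not None:
            # Close the previous session: it ran until the idle timeout expired.
            total += max(busy_until + auto_suspend - session_start, 60)
        session_start, busy_until = start, start + duration
    if session_start is not None:
        total += max(busy_until + auto_suspend - session_start, 60)
    return total


# Hypothetical workload: two short queries close together, one much later.
queries = [(0, 30), (100, 30), (2000, 60)]
for timeout in (60, 600):
    print(timeout, billed_seconds(queries, auto_suspend=timeout))
```

A shorter timeout reduces billed idle time for sporadic workloads, at the cost of more frequent resumes, each subject to the 60-second minimum.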

Databricks, by contrast, can incur unpredictable costs if compute clusters remain active for extended periods during ETL or machine learning tasks. However, it excels in flexibility and scalability for high-throughput data processing, which can make it more cost-effective for specific data engineering and advanced analytics workloads.

Conclusion

Both Databricks and Snowflake are great data analytics platforms, with each being powerful in different domains. The choice between the two ultimately comes down to your organization’s data maturity, technical expertise, primary workloads, and long-term strategy.

For machine learning, data science, and real-time data engineering, Databricks provides a flexible, open, and powerful platform. For analytics, reporting, and easy scalability, Snowflake is hard to beat.

Some enterprises even use both, with Databricks for transformation and ML and Snowflake for BI and reporting, which shows that in the world of modern data platforms, integration often trumps competition.
